Disclaimer (You can ignore this part and start with the next section.)

Why am I writing these blogs? Especially since, as my brother told me the other day, there’s a good chance that no one is going to read this. I am a strong believer in the Feynman technique. I like to improve my understanding of the things I learn by teaching them to others (whether it is to an actual audience or an imaginary one). So here I am trying to grasp Reinforcement Learning for the first time. I will be explaining it through a set of three blogs. If you are an actual human reading this and you found these materials helpful, contact me and tell me about it or even better share it on LinkedIn to spread the knowledge. Have fun learning!

What is Reinforcement Learning?

What is Reinforcement Learning? People are usually familiar with supervised or unsupervised learning but not RL (often referred to as semi-supervised learning). In Reinforcement Learning, we choose an action using a policy and some data to maximize the expected long-term reward (all these terms will be explained). This might sound like supervised learning but it is different. In RL, we cannot simply learn a function that maps the data to the action directly (by relying on labels from a knowledgeable supervisor), because we don’t know the best action until we see the future play out.

Key Idea

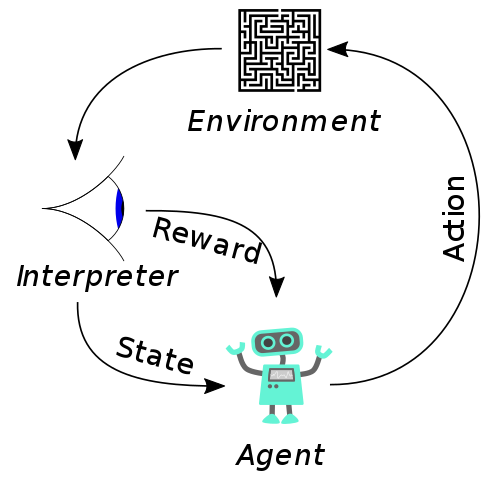

Throughout our lives, we learn different skills, even as babies, by interacting with our environment—whether it’s riding a bike for the first time or playing a musical instrument. We are always attuned to how our environment responds to our actions, adjusting our behaviour to achieve better results and reach our goals. Simply put, we interact with the world, experience the results, and update our actions based on those outcomes. The approach we explore in this tutorial, reinforcement learning (RL), uses the same logic. Through RL, an agent interacts with its environment, receives feedback, and learns to make better decisions to maximize its rewards.

Explaining the elements of Reinforcement Learning

State

It represents a snapshot of the environment at a specific point in time. It contains all the relevant information needed for the agent to decide on its action.

Policy

It is the transition function that maps the perceived state to the action to be taken by the agent. It defines the behavior of the agent at a given time. The goal is to find a policy that maps the current state to the action that maximizes the total reward. This policy function is usually stochastic, relying on a probability distribution for its action space. It also follows the Markovian property, where future states of the environment depend only on the previous ones and the action taken, not on the sequence of states and actions that preceded it. In mathematical terms, this is expressed as:

\[P(s_{t+1} \mid s_t, a_t) = P(s_{t+1} \mid s_1, a_1, s_2, a_2, \ldots, s_t, a_t)\]where $( s_t )$ is the current state, $( a_t )$ is the current action, and $( s_{t+1} )$ is the next state.

Reward

The reward signals if an event is good or bad for the agent by following a certain action. Rewards represent the immediate desirability of states following an action.

Value function

The value function represents the total amount of reward accumulated over the future. The main objective of an agent is to maximize the accumulated total reward over the long run of an episode. Rewards are given directly by the environment, but values must be estimated. Efficient value estimation is the core problem RL tries to improve.

Episode

The sequence of events is defined by actions and states until the agent gets to a terminal state (the end of the episode). In a game, an episode ends when the player finally defeats the boss. This can take a long time to reach that point or it can be quite fast if we have a good policy.

Model (optional)

It tends to learn the behavior of the environment without waiting for an actual outcome. Given a state and action, the model will predict, for example, the next state or the next action to take. Methods that rely on such models are called model-based, whereas the opposite are model-free methods. Model-based methods learn through trial and error to effectively model the environment.

Trade-off: exploration and exploitation

One of the key challenges in RL is balancing between exploration and exploitation. Exploration refers to the agent’s strategy of exploring new actions or states that it hasn’t extensively experienced before. This allows the agent to gather more information about the environment and potentially discover better long-term strategies. Without exploration, the agent might settle for suboptimal strategies based solely on its current policy. Exploitation, on the other hand, involves the agent choosing actions that it believes are currently the best based on its current policy. This maximizes short-term rewards by choosing actions that are known to be effective.

Example (Digging for Gold): The strategy of hitting the center “easy” blocks leads to the discovery of a small quantity of gold instead of exploring other paths that may lead to better outcomes. We aim to balance between exploration and exploitation to achieve optimal long-term performance. Usually, techniques like ε-greedy methods are used.

ε-greedy exploration

When deciding between actions, we randomly explore other actions by following a probability distribution of ε instead of always picking the best optimal action according to the current policy. Adding randomness results in exploring other strategies more frequently.

TD (Temporal difference learning)

Temporal Difference (TD), is undoubtedly the most central idea in Reinforcement learning. TD is a combination of both Monte Carlo and dynamic programming. Like Monte Carlo, TD learns directly from experience without needing to model the environment’s dynamics. Like DP, TD uses bootstrapping to estimate the values of subsequent states to determine the value of the current state. Essentially, it involves leveraging “estimated” information from future states to improve our understanding of the current state without waiting for the “actual” outcome.

Both TD and Monte Carlo use experience following some policy $\pi$ to update their estimate for value $V(S_t)$. Whereas MC methods need to wait until the end of the episode to determine the total return (or Reward $R$) to predict $V(S_t)$, TD on the other hand just wait until the next time step $t+1$ to form a target by updating $R_{t+1}$ and estimating $V(S_{t+1})$. We call this TD(0) or one-step TD where the target is based solely on the next step $t+1$ outcome. This is a special case of TD($\gamma$) with $\gamma$ time steps. In a nutshell, the Monte Carlo approach requires us to wait for the final outcome to estimate the current state because we don’t know yet the “true return” of that episode. On the other hand, TD waits for the $\gamma$ next steps or simply the next step $t_1$.

\[V(S_t) \leftarrow V(S_t) + \alpha \left[ (R_{t+1} + \gamma V(S_{t+1}) ) - V(S_t) \right]\]$V(S_t)$ represents the value function for current state $S_t$ that estimate .

$\alpha$ is the learning rate (a positive constant).

$R_{t+1}$ is the immediate reward received after transitioning from state $S_t$ to state $S_{t+1}$

$\gamma$ is the discount factor (a value between 0 and 1) that accounts for future rewards.

DP methods try to solve problems by breaking them down into smaller subproblems and solving those subproblems first. Like DP, TD uses bootstrapping to update estimates based on other estimates—to iteratively improve our understanding of the overall problem. The future reward $v_\pi(S_t)$ from the current state $S_t$ and following the policy $\pi$ is the current reward plus the next step future reward $v_\pi(S_{t+1})$. Note that here the current reward is actually $R_{t+1}$ and not $R_t$, because it is the observed reward from transitioning from $S_t$ to $S_{t+1}$ using a policy.

\[v_\pi(S_t) = \mathop{\mathbb{E}} [G_t | S_t] = \mathop{\mathbb{E}} [R_{t+1} + \gamma v_\pi(S_{t+1}) | S_t]\]The DP target is an estimate not because of the expected values $V(S_t)$ (provided by a model of the environment), but because the “actual or optimal” $v_\pi(S_{t+1})$ value is not known yet and therefore we rely on the estimate $V(S_{t+1})$.

\[(R_{t+1} + \gamma V(S_{t+1}) ) - V(S_t)\]We refer to this term as the TD error that we are going to be using to update $V(S_t)$ of the current state by multiplying it by a small step $\alpha$ value. This Equation can be understood by saying that we are getting the TD error between the current prediction or estimation $V(S_t)$ and the better estimation $R_{t+1} + \gamma V(S_{t+1})$, which depends on the next reward and the next estimation state. We say that it is a “better estimate” because it relies on the observed reward $R_{t+1}$ when we transition to the next time step.

Why TD is better?

TD methods have an advantage over DP methods because they do not require a model of the environment (of all its actual rewards and probability distributions). Also, TD is better than MC, because it can be implemented in an online, incremental fashion, as it can learn one guess from the next without waiting for an actual outcome (only waiting for a one-time step). On the other hand, MC must wait until the end of the episode, because only then is the return known. Some applications have very long episodes which can make training slower. This can be easily observed by comparing the code of TD methods like Q_learning / SARSA and Monte Carlo in the next blog.

And that’s a wrap! I hope that you got to learn the fundamentals of RL and that this has raised your curiosity to learn more about it. Please take a look at the next blog to learn more about how we use TD in famous RL algorithms like Q-learning and SARSA.